Image Production with the Help of Artificial Intelligence

How imagejet is revolutionizing image production with new workflows

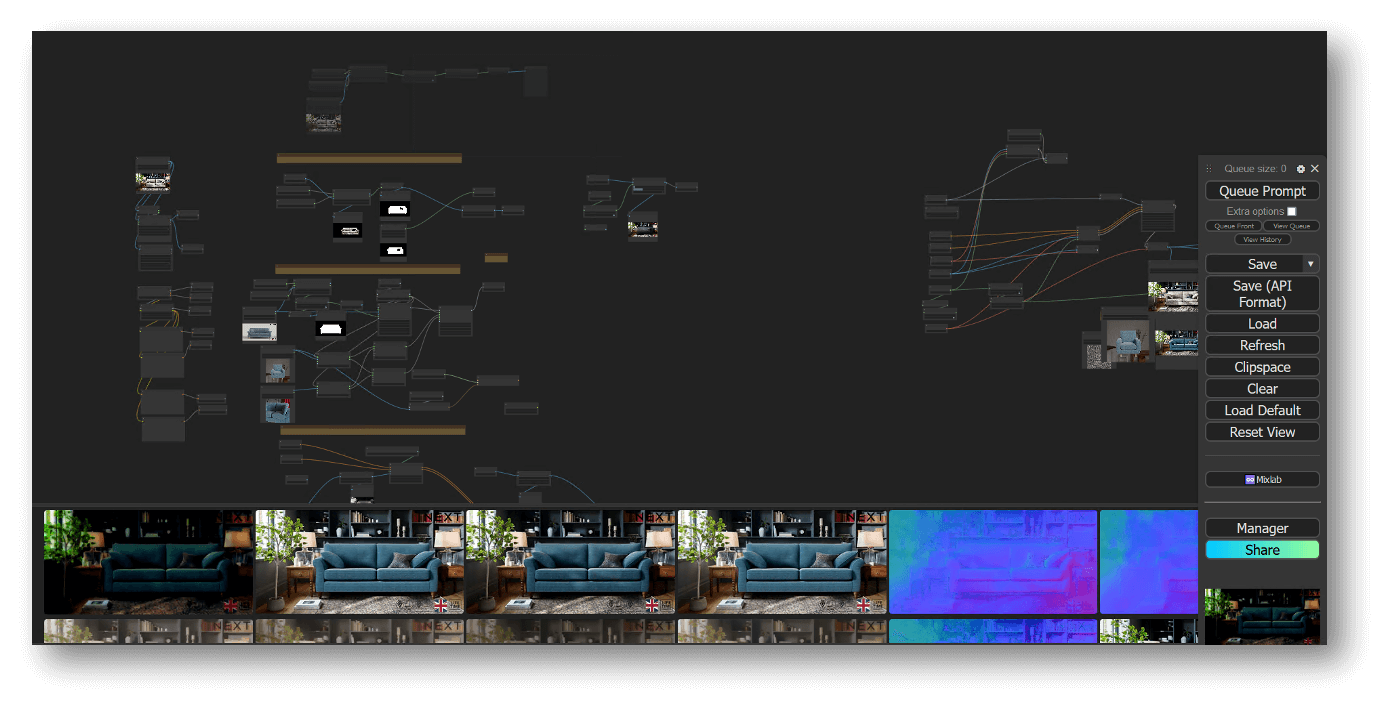

Over the past few years, the rapid development of Generative Artificial Intelligence (GenAI) has made image production for our customers even more efficient. Arvato Systems used to focus on a systematic approach in which customers provided Excel spreadsheets with product variants. This information was then combined with 3D models, materials, and scenes to render appealing images. To meet growing demands and make the most of new technologies, we have now integrated GenAI methods into our workflows. Powerful tools such as ControlNet, Stable Diffusion, and LoRAs are used in conjunction with ComfyUI. LoRA (Low-Rank Adaptation) is a fine-tuning technique that leaves the weights of a large model frozen and trains only a small number of additional low-rank parameters, which allows us to adapt large models efficiently to specific tasks.
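To make the parameter saving concrete, here is a minimal sketch of the low-rank idea behind LoRA: instead of updating a full weight matrix W, only two small matrices B and A are trained, and their product is added to W. The layer size and rank below are illustrative assumptions, not values from our production models.

```python
def full_param_count(d_out: int, d_in: int) -> int:
    """Weights in a dense layer W of shape (d_out, d_in)."""
    return d_out * d_in


def lora_param_count(d_out: int, d_in: int, rank: int) -> int:
    """Trainable weights when W stays frozen and only the low-rank
    update B @ A is learned, with B of shape (d_out, rank) and
    A of shape (rank, d_in)."""
    return rank * (d_out + d_in)


# Illustrative values only (assumed, not taken from a specific model).
d_out, d_in, rank = 768, 768, 8
full = full_param_count(d_out, d_in)
lora = lora_param_count(d_out, d_in, rank)
print(f"full fine-tuning: {full} weights")
print(f"LoRA (rank {rank}): {lora} weights ({lora / full:.1%} of full)")
```

The smaller the rank, the fewer weights need to be trained and stored per adapted layer, at the cost of a less expressive update.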

This article introduces the four new workflows with which imagejet is revolutionizing image production.

Workflow I: Generation of Training Data with imagejet

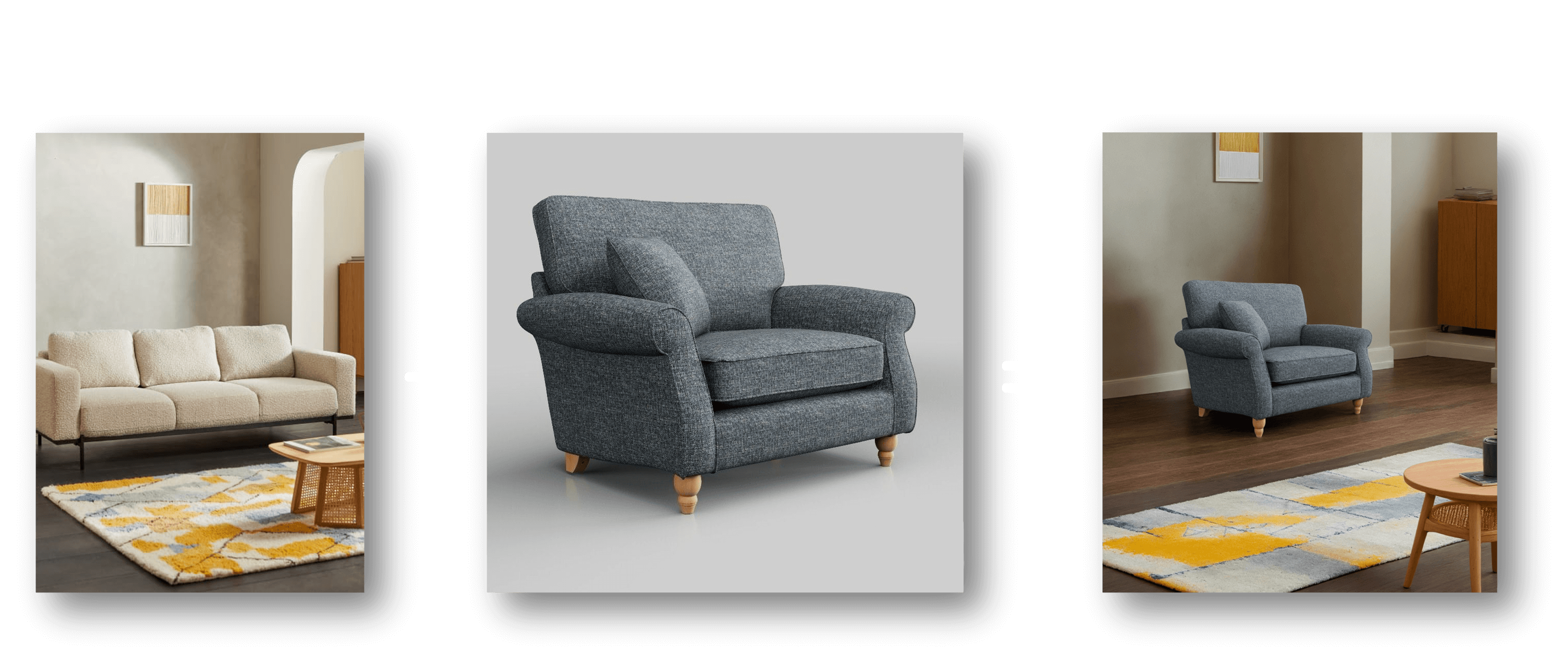

The first workflow involves generating training data with imagejet to train LoRAs or Stable Diffusion models. These models allow us to change materials on sofas in images or replace the sofa itself in a scene.

Steps in workflow I:

- Data acquisition: Comprehensive collection and categorization of images and textures. This includes computer-generated (CG) images, which have traditionally been rendered in image production with imagejet, as well as existing archive data and collections from the customer's inventory.

- Labeling: Precise labeling of each image to ensure effective model training.

- Training data generation: Using imagejet to create extensive training datasets. This includes creating large and varied sets of the images described above, from which the models can learn.

- Model training: Training of LoRAs and Stable Diffusion models with the generated data. This involves teaching the models how to perform tasks such as material replacement or scene adjustments successfully.
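The labeling and data generation steps above can be sketched as follows: every product variant is paired with every scene, and each planned image receives a text caption for training. This is a simplified illustration, not the imagejet implementation; the CSV columns, scene names, file naming scheme, and the `build_manifest` helper are all hypothetical.

```python
import csv
import io
from itertools import product

# Hypothetical stand-in for a customer spreadsheet exported as CSV.
VARIANTS_CSV = """model,material,color
sofa_a,leather,brown
sofa_a,fabric,grey
sofa_b,velvet,green
"""

SCENES = ["studio", "living_room"]  # assumed scene names


def build_manifest(variants_csv: str, scenes: list[str]) -> list[dict]:
    """Pair every product variant with every scene and attach a caption."""
    rows = list(csv.DictReader(io.StringIO(variants_csv)))
    manifest = []
    for row, scene in product(rows, scenes):
        name = f"{row['model']}_{row['material']}_{row['color']}_{scene}"
        caption = f"{row['color']} {row['material']} sofa, {scene.replace('_', ' ')}"
        manifest.append({"file": f"{name}.png", "caption": caption})
    return manifest


manifest = build_manifest(VARIANTS_CSV, SCENES)
for entry in manifest:
    print(entry["file"], "|", entry["caption"])
```

Because the captions are derived from the same data that drives the rendering, every generated image is labeled consistently by construction.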

This workflow allows us to achieve a high level of precision when exchanging materials and customizing the sofa in different scenes. The use of imagejet has proven to be highly efficient and provides a scalable solution for the generation of training data.

Workflow II: Automated Image Exchange Process

In the second workflow, we use the trained LoRAs and have developed an automated process to refine images quickly and efficiently using inpainting.

Steps in workflow II:

- Use of the model: Implementation of the trained LoRAs.

- Automation: Development of an automated system to perform tasks such as inpainting or changing styles and materials for image content. The system can automatically modify parts of an image or apply new textures and designs to objects.

- Validation: Checking the modified images for consistency and quality.
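One simple check that could be part of the validation step is to confirm that inpainting only changed pixels inside the mask. The sketch below assumes small grayscale images stored as nested lists; the `changed_outside_mask` helper and the tolerance parameter are hypothetical and only illustrate the idea.

```python
Image = list[list[int]]   # grayscale pixel values, row by row
Mask = list[list[bool]]   # True where inpainting is allowed to change pixels


def changed_outside_mask(original: Image, edited: Image, mask: Mask,
                         tolerance: int = 0) -> list[tuple[int, int]]:
    """Return (x, y) positions that changed although the mask protects them."""
    violations = []
    for y, rows in enumerate(zip(original, edited, mask)):
        for x, (before, after, editable) in enumerate(zip(*rows)):
            if not editable and abs(before - after) > tolerance:
                violations.append((x, y))
    return violations


original = [[10, 10, 10],
            [10, 10, 10]]
mask = [[False, True, True],
        [False, True, True]]
edited = [[10, 80, 90],    # changes inside the mask are expected
          [12, 70, 60]]    # pixel (0, 1) drifted outside the mask

print(changed_outside_mask(original, edited, mask))
print(changed_outside_mask(original, edited, mask, tolerance=5))
```

A small tolerance is useful in practice because encoding and decoding an image can introduce minor differences even in regions that were not meant to change.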

This process allows us to quickly meet our customers' requirements and generate varied material and color combinations without manual effort. Automation leads to significant savings in time and resources and ensures that the results meet the highest quality standards.

Workflow III: Using Stable Diffusion for Scene Creation

The third workflow combines existing background images with Stable Diffusion to recreate them as accurately as possible. A rendering is then created in a studio scene, and the sofa is integrated into the newly created scene so that the scene no longer needs to be built in 3D.

Steps in workflow III:

- Background analysis: Analyzing the existing background image.

- Stable Diffusion: Using Stable Diffusion to recreate the background as accurately as possible.

- Placing the sofa: Rendering the sofa in a studio scene and seamlessly integrating it into the redesigned background.

- Fine-tuning: Adjusting lighting and shadows to create a realistic image.
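Placing the rendered sofa into the generated background is, at its core, an alpha compositing step. The following sketch shows the standard "over" blend on tiny RGB images stored as nested lists. It is a simplified illustration under assumed data structures and does not cover the lighting and shadow adjustments mentioned above.

```python
Pixel = tuple[int, int, int]


def composite_pixel(fg: Pixel, alpha: float, bg: Pixel) -> Pixel:
    """Alpha-over blend: alpha=1 keeps the render, alpha=0 keeps the background."""
    return tuple(round(alpha * f + (1.0 - alpha) * b) for f, b in zip(fg, bg))


def composite(render, alphas, background):
    """Blend a rendered layer onto a background, pixel by pixel."""
    return [
        [composite_pixel(f, a, b) for f, a, b in zip(f_row, a_row, b_row)]
        for f_row, a_row, b_row in zip(render, alphas, background)
    ]


sofa = [[(200, 100, 0), (200, 100, 0)]]     # rendered sofa layer
alpha = [[1.0, 0.5]]                        # second pixel is a soft edge
room = [[(0, 100, 200), (0, 100, 200)]]     # generated background
print(composite(sofa, alpha, room))
```

Soft alpha values along the sofa's outline are what keep the edge from looking cut out against the new background.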

This workflow offers a cost-effective alternative to traditional 3D scene development and allows flexibility to adapt to different environments. Combining Stable Diffusion with studio renderings leads to impressive and realistic results.

Workflow IV: Creation and Use of 3D Avatars

The fourth workflow involves creating 3D avatars, animating them with NVIDIA ACE, and synchronizing their lip movements in real time with LipSync. In combination with a custom Large Language Model (LLM), these avatars can be used as company representatives for first-level support.

Steps in workflow IV:

- Avatar creation: Designing appealing and realistic 3D avatars.

- Animation: NVIDIA ACE is used to animate the avatars.

- LipSync: Real-time LipSync gives the avatars a natural way of speaking.

- LLM integration: Connecting the avatars to a customized LLM to ensure efficient and legally compliant communication with customers.

This workflow offers an innovative solution for customer service and enables direct interaction with customers through virtual representatives. The use of these avatars can increase support efficiency and ensure consistent communication.

Conclusion

The integration of GenAI into image production processes has led to significant advances in efficiency, flexibility, and quality. By carefully developing and implementing the four workflows described above, we are able to meet the growing demands of our customers and effectively utilize the latest technologies. The use of imagejet to generate training data, the automation of the image exchange process, the combination of Stable Diffusion with studio renderings, and the development of interactive 3D avatars demonstrate the impressive possibilities of GenAI in contemporary image production.

Written by