How to Successfully Deploy Serverless Architectures

Serverless architectures promise improved utilization, shortened time-to-market cycles, scalability and resilience. However, building a serverless solution presents its own challenges - especially for teams more familiar with traditional setups.

Function as a Service and the Principles of Developing Good Cloud Apps

A serverless application is typically event-driven. An event can be, for example, an HTTP request from a client, a status change in a database, a window of records from a data stream, or a message from an IoT device. Each event is processed by a function running on a FaaS (Function as a Service) platform. Cloud service providers (CSPs) generally support a variety of programming languages for these functions: AWS offers AWS Lambda, for example, and Microsoft offers Azure Functions.

Instead of writing infrastructure-related code, developers of serverless applications can concentrate fully on the functionality of their application, because the CSP takes care of provisioning, scaling and securing the underlying environment.

Of course, in most cases, an application's functionality and logic depend on data that must be stored and processed. To enable horizontal scaling, functions should not hold state themselves (one of the principles of the twelve-factor app). Instead, the application should keep its state in backing services such as databases, caches or search engines, which the functions access directly.
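The stateless principle can be illustrated with a minimal sketch. The `KeyValueStore` interface and the `InMemoryStore` stand-in are hypothetical names introduced only so the example runs locally; in a real deployment the store would be a database or cache. The handler keeps no module-level counter, so any number of parallel function instances see the same data.

```python
from typing import Optional, Protocol


class KeyValueStore(Protocol):
    """Hypothetical interface for an external backing service (database, cache, ...)."""

    def get(self, key: str) -> Optional[int]: ...

    def put(self, key: str, value: int) -> None: ...


class InMemoryStore:
    """Local stand-in for a real database, used only to make the sketch runnable."""

    def __init__(self) -> None:
        self._data: dict = {}

    def get(self, key: str) -> Optional[int]:
        return self._data.get(key)

    def put(self, key: str, value: int) -> None:
        self._data[key] = value


def count_visit(store: KeyValueStore, page: str) -> int:
    """Stateless handler logic: all state is read from and written to the store.

    Because nothing is kept inside the function instance, the platform can
    start or stop instances freely without losing data.
    """
    current = store.get(page) or 0
    store.put(page, current + 1)
    return current + 1
```

Note that the read-then-write above is not atomic. With concurrent instances, a production version would rely on an atomic update in the database itself (for example, DynamoDB's `ADD` update expression) rather than this two-step pattern.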

Serverless Architecture Using the Example of an API-Based Web Application

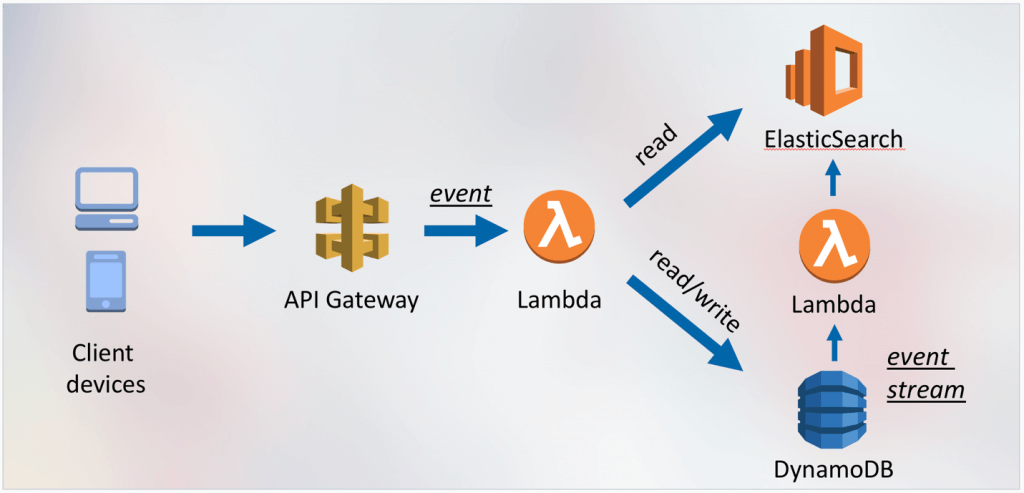

All requests from clients (browsers, native applications, etc.) are directed to the API gateway, which handles concerns such as authorization and rate limiting. The gateway exposes the Lambda functions through a RESTful API: each incoming request is translated into an event that invokes a Lambda function. This setup also lends itself to a microservices architecture, because each REST endpoint and method can be assigned to a different function (or even a different system). The Lambda functions execute the business logic and interact with a database. In this example, we use DynamoDB, a highly scalable NoSQL database service. DynamoDB scales automatically and is billed based on the read and write capacity units provisioned.
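To make the request flow more concrete, here is a minimal sketch of a Lambda handler for API Gateway's Lambda proxy integration, where the event carries fields such as `httpMethod`, `pathParameters` and `body`, and the function returns `statusCode`, `headers` and `body`. The in-memory `ITEMS` dictionary is a hypothetical stand-in for the DynamoDB table, and routing several methods through one handler is a simplification; as described above, each endpoint and method could also get its own function.

```python
import json
from typing import Any

# Hypothetical in-memory stand-in for the DynamoDB table.
ITEMS: dict = {}


def _response(status: int, body: Any) -> dict:
    """Build a response in the shape the Lambda proxy integration expects."""
    return {
        "statusCode": status,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(body),
    }


def handler(event: dict, context: Any = None) -> dict:
    """Handle GET/PUT on /items/{id} (route and field names are illustrative)."""
    method = event.get("httpMethod")
    item_id = (event.get("pathParameters") or {}).get("id")

    if not item_id:
        return _response(400, {"error": "missing id"})

    if method == "GET":
        item = ITEMS.get(item_id)
        if item is None:
            return _response(404, {"error": "not found"})
        return _response(200, item)

    if method == "PUT":
        # Business logic would validate the payload here before storing it.
        ITEMS[item_id] = json.loads(event.get("body") or "{}")
        return _response(200, ITEMS[item_id])

    return _response(405, {"error": f"unsupported method {method}"})
```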

Although DynamoDB provides fast and durable data storage, its query capabilities are limited. For full-text search and aggregations, we therefore rely on Elasticsearch, which AWS offers as a managed "search engine as a service" (Amazon Elasticsearch Service).
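As an illustration of the kind of query DynamoDB cannot answer on its own, the following sketch builds an Elasticsearch Query DSL request body that combines a full-text `match` query with a `terms` aggregation. The field names `description` and `category` are hypothetical, and the sketch assumes `category` is mapped as a `keyword` field (a terms aggregation on an analyzed text field would be rejected by default).

```python
import json


def build_search_request(text: str, size: int = 10) -> dict:
    """Build a Query DSL body: full-text search plus a per-category count."""
    return {
        "size": size,
        # Full-text match on an analyzed text field (hypothetical field name).
        "query": {"match": {"description": text}},
        # Bucket the matching documents by category (assumed keyword field).
        "aggs": {"by_category": {"terms": {"field": "category"}}},
    }


print(json.dumps(build_search_request("waterproof hiking boots"), indent=2))
```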

To synchronize the search index with the database, we again rely on Lambda. By subscribing a Lambda function to the table's DynamoDB stream, our function receives an event for every change to the data (create, update, delete) and updates the search index accordingly. What is particularly convenient is that both the database and the Lambda function scale automatically as needed, and we don't have to worry about software updates or similar maintenance.
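A minimal sketch of such a synchronization function follows. DynamoDB stream records carry an `eventName` of `INSERT`, `MODIFY` or `REMOVE` and the item data in DynamoDB's typed JSON format (for example `{"S": "text"}` or `{"N": "3"}`). The sketch assumes the stream view type includes new images (`NEW_IMAGE` or `NEW_AND_OLD_IMAGES`) and that the table key is a string attribute named `id`; `SEARCH_INDEX` is a hypothetical in-memory stand-in for the Elasticsearch index.

```python
from typing import Any

# Hypothetical stand-in for the search index: document id -> document.
SEARCH_INDEX: dict = {}


def _from_dynamodb(value: dict) -> Any:
    """Convert one typed DynamoDB attribute to a plain Python value.

    Only a few types are handled for brevity; boto3's TypeDeserializer
    covers the full set.
    """
    type_tag, raw = next(iter(value.items()))
    if type_tag == "S":
        return raw
    if type_tag == "N":
        # Numbers arrive as strings; keep integers as int, everything else as float.
        return int(raw) if raw.lstrip("-").isdigit() else float(raw)
    if type_tag == "BOOL":
        return raw
    if type_tag == "NULL":
        return None
    raise ValueError(f"unsupported DynamoDB type {type_tag}")


def _to_document(image: dict) -> dict:
    return {name: _from_dynamodb(attr) for name, attr in image.items()}


def sync_handler(event: dict, context: Any = None) -> None:
    """Apply each stream record to the search index."""
    for record in event["Records"]:
        change = record["dynamodb"]
        doc_id = change["Keys"]["id"]["S"]
        if record["eventName"] in ("INSERT", "MODIFY"):
            SEARCH_INDEX[doc_id] = _to_document(change["NewImage"])  # upsert
        elif record["eventName"] == "REMOVE":
            SEARCH_INDEX.pop(doc_id, None)  # delete if present
```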

There is one caveat, however: contrary to what its name suggests, the Elasticsearch service currently offered by AWS is less elastic than Lambda and DynamoDB. The Elasticsearch servers are managed for us, and both the instance type and the number of instances are configurable, but the cluster does not scale automatically. As a result, during a traffic peak DynamoDB and Lambda may scale seamlessly while Elasticsearch fails because it was not provisioned with enough resources.

Of course, you can write your own scripts to adjust the cluster configuration dynamically, or set up a queue to throttle indexing requests. However, this runs counter to serverless principles, because it creates operational overhead and complexity for us. That's why I hope AWS will extend its serverless offering to Elasticsearch, as it has done for relational databases with the recently announced Aurora Serverless. (Incidentally, Aurora Serverless offers a good alternative to the setup described here if you prefer SQL-based databases.)
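To show what the queue-based workaround involves, and why it adds code that a team must own and operate, here is a minimal token-bucket sketch that buffers index updates and forwards them at a bounded rate. The class name and parameters are hypothetical; time is passed in explicitly to keep the sketch deterministic, whereas a real worker would read a clock such as `time.monotonic()`.

```python
from collections import deque
from typing import Any, Callable, Optional


class ThrottledIndexQueue:
    """Buffer index updates and forward them at a bounded rate (token bucket).

    `rate` tokens are added per second, capped at `burst`; each forwarded
    document consumes one token. Documents without a token stay queued.
    """

    def __init__(self, send: Callable[[Any], None], rate: float, burst: int) -> None:
        self._send = send
        self._rate = rate
        self._burst = burst
        self._tokens = float(burst)
        self._last: Optional[float] = None
        self._pending: deque = deque()

    def enqueue(self, doc: Any) -> None:
        self._pending.append(doc)

    def drain(self, now: float) -> int:
        """Refill tokens for the elapsed time, then forward what the budget allows."""
        if self._last is not None:
            elapsed = now - self._last
            self._tokens = min(self._burst, self._tokens + elapsed * self._rate)
        self._last = now
        sent = 0
        while self._pending and self._tokens >= 1:
            self._send(self._pending.popleft())
            self._tokens -= 1
            sent += 1
        return sent
```

During a traffic peak, updates accumulate in the queue instead of overwhelming the cluster, at the cost of a growing indexing delay.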

Serverless and the Challenge for Traditional Organizations

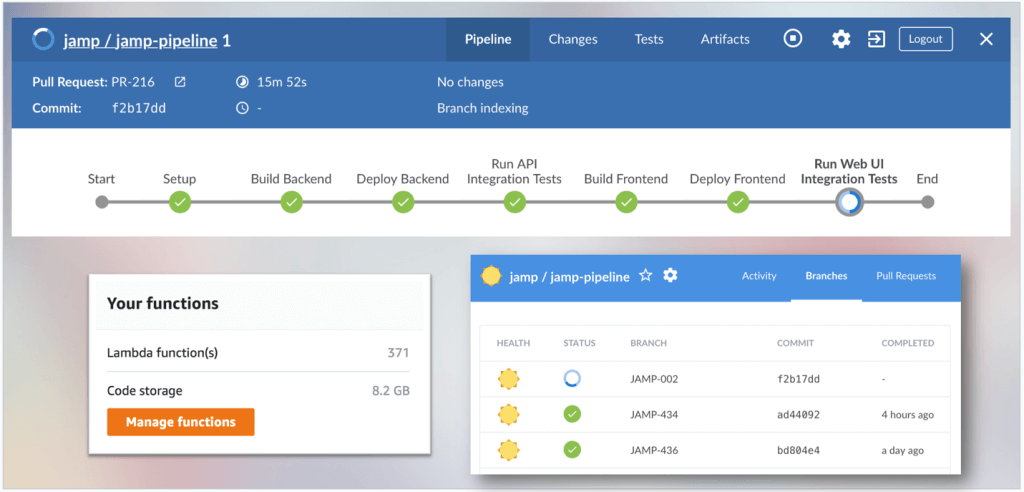

To make the most of the serverless concept, as much of the workflow as possible should be automated. Traditional teams in particular often reach their limits with FaaS: the number of functions quickly grows beyond the point where creating, updating or deleting them manually is still practical.
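One way to keep a growing number of functions manageable is to generate their definitions instead of maintaining them by hand. The sketch below assumes AWS SAM as the templating tool (the article does not name one) and generates a template with one `AWS::Serverless::Function` per entry of a hypothetical route table; the handler names, runtime and `CodeUri` are illustrative.

```python
import json

# Hypothetical route table: (logical name, HTTP method, path, handler).
ROUTES = [
    ("GetItemFunction", "get", "/items/{id}", "items.get_item"),
    ("PutItemFunction", "put", "/items/{id}", "items.put_item"),
    ("SearchFunction", "get", "/search", "search.query"),
]


def build_template(routes: list) -> dict:
    """Generate a SAM template with one function and API event per route."""
    resources = {}
    for name, method, path, handler in routes:
        resources[name] = {
            "Type": "AWS::Serverless::Function",
            "Properties": {
                "Handler": handler,
                "Runtime": "python3.12",
                "CodeUri": "src/",
                # API Gateway event source wiring this path/method to the function.
                "Events": {
                    "Api": {"Type": "Api", "Properties": {"Path": path, "Method": method}}
                },
            },
        }
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Transform": "AWS::Serverless-2016-10-31",
        "Resources": resources,
    }


print(json.dumps(build_template(ROUTES), indent=2))
```

Adding an endpoint then means adding one line to the route table; the generated template can be deployed by the same pipeline every time.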

In addition, the more cloud services you use, the harder it becomes to run applications locally. Mocks are only a partial substitute: small differences in behavior often lead to different results in the local and the cloud environment. Deploying dedicated cloud environments for testing can help. For example, we run a setup in which a new stack is created in the cloud automatically whenever a feature branch is created in our Git repository. This allows isolated development and testing. Once the feature has been merged into the main branch, the temporary environment is deleted automatically.
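A small but necessary piece of such a per-branch setup is deriving a valid stack name from the branch name. The sketch below assumes AWS CloudFormation stacks, whose names may contain only letters, digits and hyphens, must start with a letter and are limited to 128 characters. The `myapp` prefix and the event names `branch_created` and `branch_merged` are hypothetical; a real pipeline would receive such events from its CI system.

```python
import re

MAX_STACK_NAME = 128  # CloudFormation limit on stack name length


def stack_name_for_branch(branch: str, prefix: str = "myapp") -> str:
    """Derive a CloudFormation-compatible stack name from a Git branch name.

    Runs of disallowed characters become single hyphens; the prefix
    (assumed to start with a letter) satisfies the leading-letter rule.
    """
    slug = re.sub(r"[^A-Za-z0-9]+", "-", branch).strip("-")
    return f"{prefix}-{slug}"[:MAX_STACK_NAME].rstrip("-")


def action_for_event(event: str, branch: str) -> tuple:
    """Map a (hypothetical) repository event to a stack operation."""
    name = stack_name_for_branch(branch)
    if event == "branch_created":
        return ("create", name)
    if event == "branch_merged":
        return ("delete", name)
    return ("ignore", name)
```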

Serverless components already come with all the necessary APIs and templates and are therefore ideally suited to automation. To deploy serverless architectures successfully, teams need to become thoroughly familiar with these tools. They need to take on more infrastructure-related scripting and templating, which is common practice in DevOps teams but is initially a challenge for more traditional teams.
